Adobe just unveiled a flood of new AI features at Adobe MAX 2025. The creative software giant is betting big on AI assistants that can edit your designs, generate soundtracks, and even manage your entire social media presence.

This year’s announcements focus heavily on conversational AI agents that understand natural language. Instead of hunting through menus and learning complex tools, you’ll soon tell Adobe apps what you want changed. The software handles the rest.

Let’s break down everything Adobe announced that actually matters.

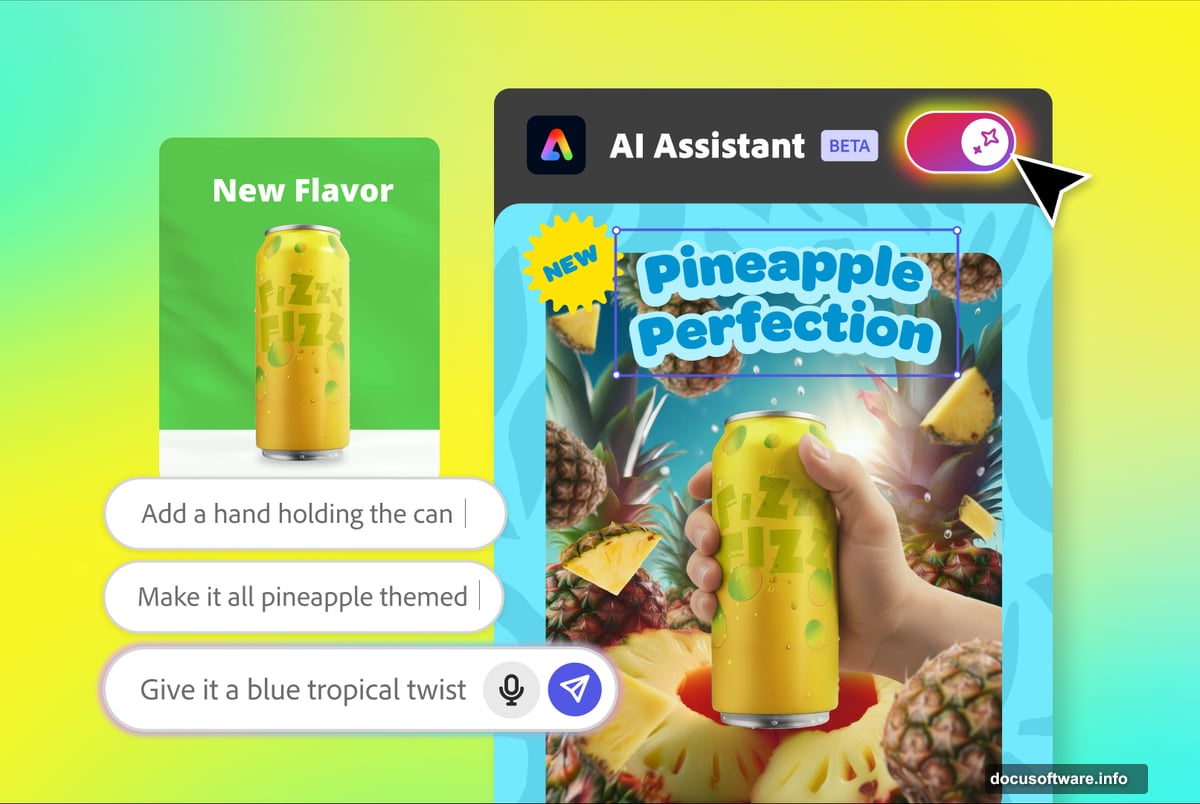

AI Assistants Are Coming to Every Adobe App

Adobe Express is the first app to get an AI assistant, which launches in public beta today. It replaces the traditional interface with a chatbot that edits designs from plain-English descriptions.

The feature works surprisingly well for basic tasks. You describe what you need, such as a "fall-themed wedding invitation" or a "retro science fair poster," and the assistant generates options. Then you refine them with follow-up prompts like "make the text bigger" or "change the color scheme to blue."

But the live demo revealed some quirks. When asked to add a Halloween costume to a raccoon photo, the AI inexplicably turned the photo into a cartoon. The presenter seemed just as surprised as the audience. So expect some trial and error with complex requests.

Adobe plans to eventually bring AI assistants to Photoshop, Premiere Pro, Lightroom, and its other Creative Cloud apps. This represents a massive shift in how Adobe software works, from tool-based interfaces to conversation-based editing.

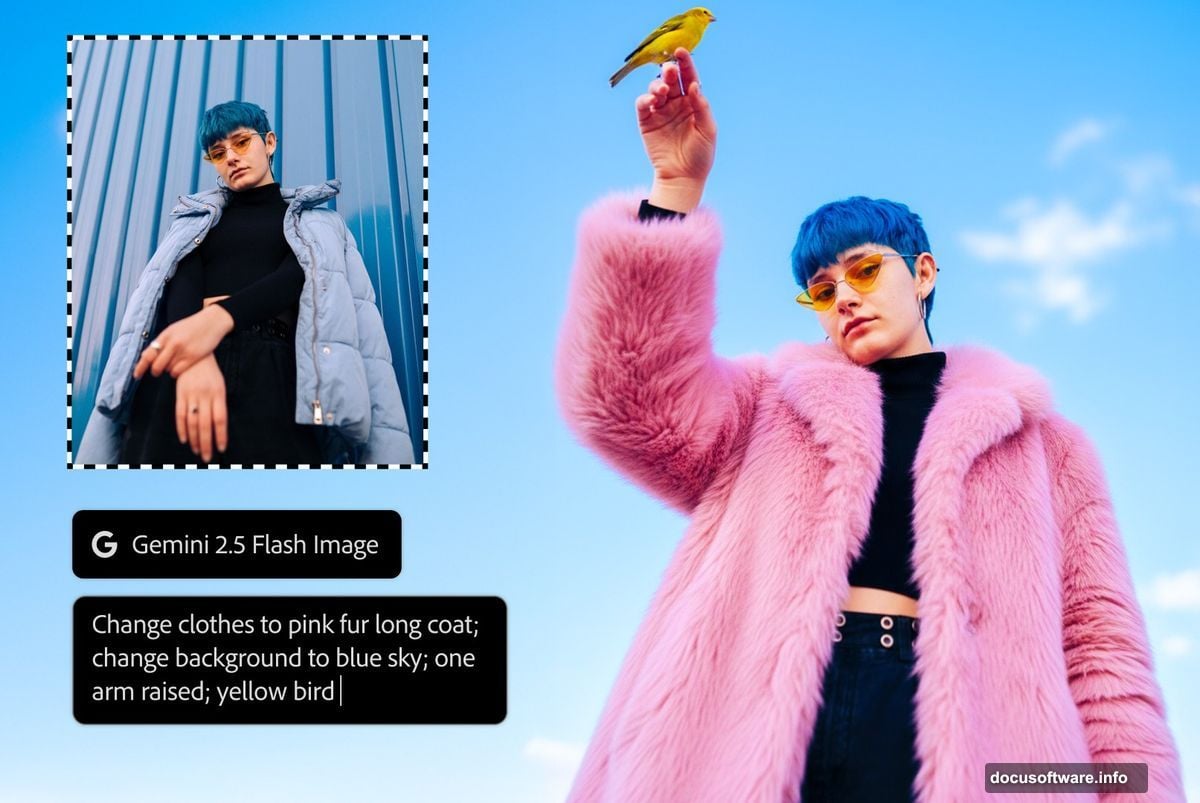

Photoshop’s Generative Fill Gets More Options

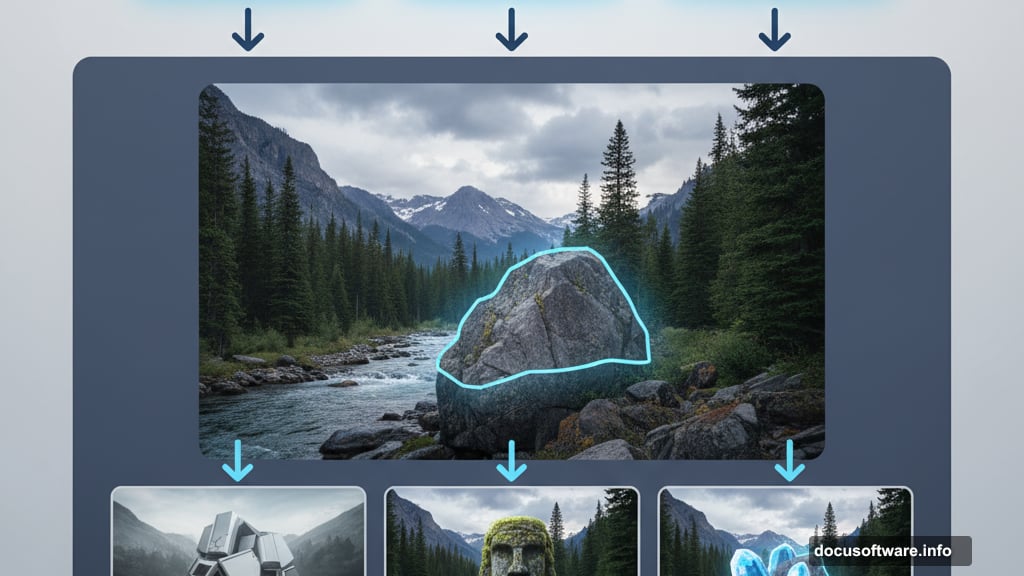

Photoshop’s popular Generative Fill feature now supports third-party AI models. Users can choose among Adobe’s Firefly, Google’s Gemini 2.5 Flash, and Black Forest Labs’ FLUX.1 Kontext.

Why does this matter? Different AI models produce different results. One might excel at photorealistic additions while another creates more stylized outputs. Having multiple options means better chances of getting exactly what you want.

The workflow stays simple. Select the area of your image you want to work on, describe what to add or change, then switch between AI models to compare results. It’s like getting three different artists’ interpretations of the same request.

Plus, this opens the door for Adobe to add more AI models in the future. The platform approach gives users flexibility while Adobe avoids locking itself into a single AI provider.

Auto-Generated Soundtracks for Your Videos

Generate Soundtrack analyzes your video footage and creates matching instrumental music. The public beta launches in the Firefly app today.

The tool offers genre presets like lo-fi, hip-hop, classical, and EDM. Or you can describe the mood you want: aggressive, sentimental, upbeat, whatever fits. The AI suggests starting prompts based on your video content, which you can then modify.

Here’s what impressed me. The generated music automatically syncs to your footage. No manual timing adjustments needed. The AI understands scene changes and paces the music accordingly.

This solves a real problem. Finding royalty-free music that matches your video’s vibe takes forever. Generate Soundtrack handles it in seconds, though the quality of AI-generated music varies widely, and more complex pieces are where it tends to fall short.

AI Voice-Overs Without Recording Equipment

Generate Speech creates synthetic voice-overs for videos. Instead of recording narration yourself or hiring voice actors, you type what you want said and the AI speaks it.

Adobe hasn’t shared many details yet. But expect multiple voice options, tone controls, and probably the ability to match timing to video cuts. Most AI voice generators work this way now.

The obvious use case? Explainer videos, tutorials, and social media content where perfect vocal performance matters less than clear communication. That said, AI voices still sound noticeably synthetic in emotional or nuanced dialogue.

Project Moonlight: Your AI Social Media Manager

This experimental feature acts as a creative director for social media campaigns. It’s built on the Firefly platform and integrates with Adobe’s editing tools.

You describe your vision to the chatbot. Project Moonlight then analyzes your previous content and creates images, videos, and social posts that match your existing style and voice.

The pitch makes sense. Small businesses and solo creators struggle to maintain consistent branding across platforms. An AI that learns your style and generates on-brand content could save them an enormous amount of time.

But here’s my concern. Social media success requires authentic personality and timely relevance. An AI assistant might nail the visual consistency while missing the human touch that makes content actually connect. We’ll see how well it performs in practice.

Premiere Pro Gets Simplified for YouTube Shorts

Adobe announced “Create for YouTube Shorts,” a streamlined editing hub launching soon. It’ll appear both in Premiere’s new mobile app and directly within YouTube.

The hub provides templates, transitions, effects, and tools specifically designed for vertical video. Adobe clearly wants to make Premiere more accessible for creators who find the full app overwhelming.

Smart move. Most YouTube Shorts creators currently use simpler mobile apps like CapCut. Adobe needs to meet them where they are rather than expecting everyone to master professional editing software.

What This Means for Creative Professionals

Adobe is fundamentally changing how its software works. The company is moving from complex tool-based interfaces to conversational AI assistants that understand intent.

This benefits beginners who found Adobe apps too intimidating. Now they can describe desired results instead of learning where every feature lives. But experienced users might find AI assistants slower than direct tool manipulation.

The bigger question? How much creative control are you willing to surrender for convenience? AI assistants make decisions about design, timing, and style based on algorithms and training data. That’s different from making those choices yourself.

Adobe clearly believes most people will trade some control for dramatic speed improvements. They’re probably right for routine tasks. But creative work often requires precise control that AI can’t yet deliver reliably.

The technology is impressive. Just expect a learning curve as you figure out how to effectively direct these AI assistants. Prompt engineering is becoming as important as traditional design skills.