Adobe just showed off something wild at their Max conference. Change one frame of video, and AI updates the entire clip automatically.

No masks. No tedious frame-by-frame editing. Just point, edit, and watch the magic happen across your whole video. Plus, Adobe demoed several other experimental AI tools that make complex editing feel effortlessly simple.

These “sneaks” aren’t released yet. But they offer a glimpse of where creative software is heading. And honestly? The future looks pretty incredible for video editors.

Frame Forward Makes Video Editing Ridiculously Fast

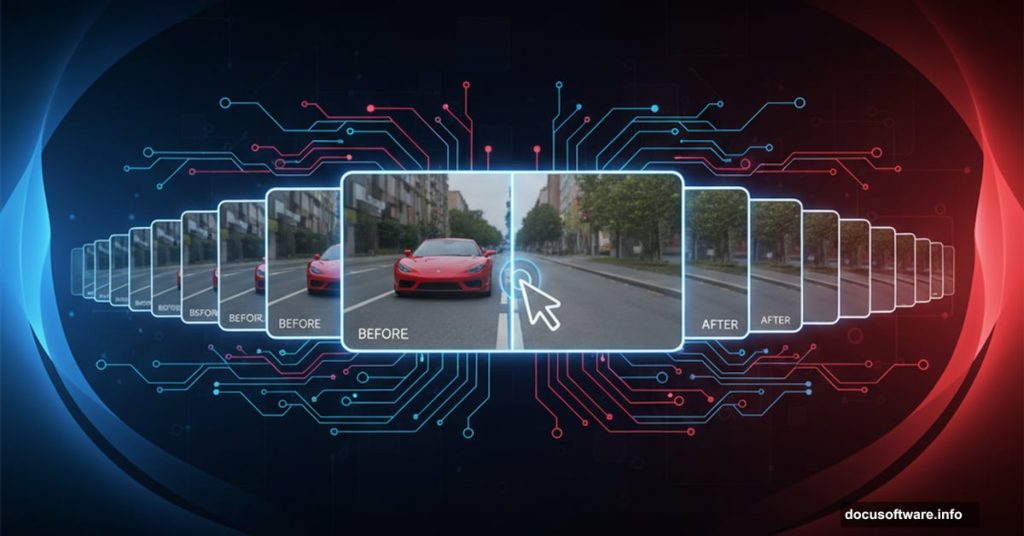

Project Frame Forward is the standout tool. It lets you add or remove objects in video without traditional masking techniques.

Here’s how it works. You select an object or person in the first frame. Frame Forward then tracks and removes that element across the entire video automatically. In Adobe’s demo, the team removed a woman from the footage and replaced her with a natural-looking background.

Sound familiar? That’s because it works like Photoshop’s Content-Aware Fill or Remove Background tools. Except now it works on moving video, not static images. So what used to take hours of rotoscoping happens in minutes.

But removal is just the beginning. You can also insert objects by drawing where you want them and describing what to add. Frame Forward generates the object and places it across all frames so it fits the scene in each one. Adobe’s demo showed an inserted puddle that reflected a cat’s movement throughout the video.

That level of context awareness is remarkable. The AI doesn’t just paste an object across frames. It understands how that object should interact with existing elements in your scene.

Light Touch Reshapes Reality With AI Lighting

Project Light Touch gives you supernatural control over lighting in photos. You can change the direction of light, switch on lamps that weren’t lit in the original shot, and control how diffuse the shadows are.

The tool creates light sources you can drag around your image canvas. Light bends around people and objects in real time. Adobe’s demo showed a pumpkin being illuminated from within while the surrounding environment shifted from day to night.

Moreover, you can adjust the color of these manipulated light sources. Want warmer lighting? Done. Need vibrant RGB effects? Easy. The tool handles it all while maintaining realistic shadows and reflections.

This goes way beyond simple brightness adjustments. Light Touch understands how light physically interacts with scenes. So your edits look believable instead of obviously manipulated.

Clean Take Fixes Speech Without Re-Recording

Project Clean Take solves one of video editing’s most annoying problems. Someone mispronounces a word or delivers a line wrong? You used to need expensive re-recording sessions.

Not anymore. Clean Take uses AI prompts to change how speech is enunciated. You can adjust the delivery or emotion behind someone’s voice. Make them sound happier or more inquisitive, or replace words entirely.

Plus, the tool preserves identifying characteristics of the original speaker’s voice. So changes sound natural, not robotic or obviously edited. That’s crucial for maintaining authenticity in your content.

Clean Take also automatically separates background noises into individual sources. You can selectively adjust or mute specific sounds while preserving overall audio quality. So you can remove that annoying hum without losing the atmosphere of your scene.

Other Wild Tools Adobe Demoed

Frame Forward, Light Touch, and Clean Take weren’t the only highlights. Adobe showcased several other experimental features worth mentioning.

Project Surface Swap instantly changes materials or textures of objects. Turn wood into metal. Replace fabric patterns. Swap surfaces with simple prompts.

Then there’s Project Turn Style, which lets you edit objects by rotating them like 3D images. No need to photograph items from multiple angles. Just rotate what you’ve already captured.

Project New Depths treats photographs as 3D spaces. The AI identifies when inserted objects should be partially hidden behind elements of the surrounding scene. So edits look dimensionally accurate instead of flat and pasted on.

You can read detailed breakdowns of each sneak preview on Adobe’s blog. But the common thread is clear. These tools make complex editing tasks feel intuitive and fast.

When Will We Actually Get These Features?

Here’s the catch. Sneaks aren’t publicly available. And they’re not guaranteed to become official features in Adobe’s Creative Cloud or Firefly apps.

However, many current Adobe features started as Sneaks projects. Photoshop’s Distraction Removal and Harmonize tools both began as conference demonstrations. So there’s a decent chance some version of these capabilities will eventually launch.

Adobe uses Max to test reaction to experimental features. Positive reception can fast-track development. Lukewarm response might send tools back to the drawing board or kill them entirely.

Still, the fact that Adobe is developing these tools signals its strategic direction. The company is betting big on AI-powered editing that lowers technical barriers and makes professional-grade work accessible to creators without years of training.

The Creative Implications Are Massive

These tools fundamentally change what’s possible for video editors and photographers. Tasks that required specialized skills now become point-and-click simple.

But that raises questions. When AI can automatically remove people from footage or manipulate lighting perfectly, does editing skill matter less? Will the barrier between amateur and professional content blur completely?

Maybe. But tools don’t replace vision. They just remove technical obstacles between imagination and execution. Great creators will use these capabilities to work faster and experiment more boldly.

The real winners are indie creators and small studios. Features that once required expensive specialists or hours of tedious work become accessible to anyone with Creative Cloud. That democratizes high-quality video production in meaningful ways.

Adobe’s sneaks show where creative software is heading. Faster, smarter, more intuitive. The future might not be here yet, but it’s getting really close.